ESDEP WG 5

COMPUTER AIDED DESIGN AND MANUFACTURE

To review briefly the developments in computing generally and to describe the various ways in which computers can be used in the context of steel construction, with particular emphasis on design, drafting and modelling.

Prerequisites: None.

Related lecture: Lecture 5.2, The Future Development of Information Systems for Steel Construction

The reduced cost of relatively powerful computing facilities has led to many activities traditionally performed by hand now being carried out with the aid of a computer. The improvements in computing which have largely made this possible are reviewed.

The potential for using computers throughout the whole process of steelwork construction, from client brief through to construction on site, is described. General applications such as word processing, spreadsheets and databases are included, but the emphasis is on analytical and design calculations and on computer aided design (CAD). The distinction between 2-D drafting systems and solid modelling is discussed, and the potential for transferring data from a solid modelling system to numerically controlled fabrication machinery is considered.

The impact of computers on the various activities involved in steel construction has been driven by developments in computing hardware, user environments, software and systems for data exchange. These developments have themselves been interlinked, typically with advances in hardware opening up new possibilities for software development. However, not all advances for the end-user have followed this sequence; to a very large extent, user-friendly interfaces have been developed in anticipation of suitable computing facilities becoming available.

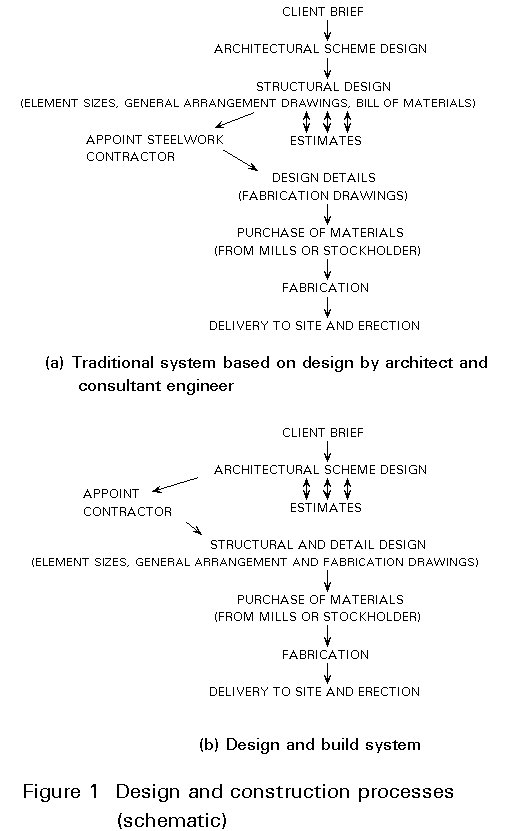

The computerised processes involved in computer aided design and manufacture (CAD/CAM) have to be integrated within the normal sequence of events involved in the inception, design and construction of structures (Figure 1). The process may be handled by a group of individual consultants on various aspects, together with a fabricator and contractor(s). Alternatively, it may be a "design-and-build" process in which one large organisation takes responsibility for the whole operation, even if specialist aspects are contracted out by the parent company. In either case, problems of communication exist, and the degree of success in overcoming them is crucial to the success of the project.

Information Technology (IT) is largely concerned with the efficient exchange of data and can be used to maximise the efficiency of all stages of the project. Although structural aspects are of primary concern here, it is assumed that all the specialist groups associated with a project consider themselves part of an integrated team. In that case, the facilities afforded by computerised systems for sharing data will be used, for example, to ensure that services can be fitted into the structure without problems arising at a later stage in the contract, particularly on site. From the architectural point of view, it is also important that structural members do not obstruct natural light from windows or impede the free movement of occupants within the building. Even in the structural steelwork context, there are areas where problems commonly arise. A typical one is where a consulting engineer has selected individually optimised member sizes throughout a building, leaving the fabricator with the problem of ordering small quantities of a large number of different sections and of designing and fabricating many different connections. Alternatively, consultants may themselves design connections which, although efficient in their use of material, incur extra fabrication costs that could have been avoided by standardising on a system suited to the fabricator's capabilities. These problems should, of course, never arise, and the fact that they commonly do is essentially the result of inefficient communication between members of the design team.

During the initial tendering phase, the structural designers have to:

This stage involves a great deal of work which will prove fruitless if the contract is not won. From this point of view, therefore, there is a need to minimise the effort expended on a very risky endeavour. On the other hand, if the contract is won, it is essential to minimise eventual variations from the tender specification, so this process must be carried out conscientiously. There is obvious scope at this stage for a relatively crude computerised approach to save a large amount of staff time in the preliminary sizing of members, the production of tender drawings and cost estimating.

When the contract has been awarded, the successful design team is then faced with the need to:

In each of these processes, using computers both directly, through application software, and as a means of sharing data is an important part of ensuring that the building is constructed efficiently and works well.

Although it is natural in a lecture such as this to concentrate on the technical input of computerisation to the design and fabrication processes, it must be borne in mind that a significant part of the potential gain in efficiency in any complex multi-stage process can come from a suitable integration of normal office-automation software such as wordprocessors, spreadsheets and databases. Decisions about how data is shared and communicated, and how the total process is organised, can also make significant differences to its efficiency.

In this lecture it is assumed that the reader has only a general awareness of computers and their uses, and of the applications of automatic control to fabrication and manufacturing operations. The lecture gives, therefore, a general review of current computing and the routes by which computing has developed over the past 40 years or so. It is necessary to introduce and use some computer jargon, which is initially printed in italics.

Computing is subject to rapid advancement and, therefore, any such description remains valid only for a short time after it is written.

Mechanically-based digital 'computers' were first developed by mathematicians in the 19th Century. They were developed further only as far as the 'adding machines' and electro-mechanical calculators (sometimes analogue rather than digital) used in commercial, industrial and military applications until the mid-20th Century. They performed numerical computations much faster than could be done manually, but were limited by their large numbers of precision-made moving parts to fairly simple general arithmetic, or to unique tasks such as range finding for artillery.

The first electronic computers were developed in the mid-20th century, using radio valves as their basic processing components. These components were accommodated on racks, and the computers thus acquired the title of mainframes. They generated large amounts of heat, so efficient cooling and air-conditioning systems were always required. Early computers were unreliable because of the limited life of the thermionic valves, and as installations grew larger so did the probability of failure. The natural limit to the size of such computers was reached when a design was considered which employed so many valves that simple probability theory predicted it would average 57 minutes of 'down-time' in every hour. Maintenance and operation of a computer required a large number of specialised personnel. Compared with the previous generation of mechanical devices, however, these computers were extremely powerful. Within industry they tended to be installed mainly for payroll and financial management, but in the research environment they allowed the field of numerical analysis to begin to grow.
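The 57-minute figure follows from the fact that a machine which needs every valve to work is only as available as the product of the individual valve availabilities. The following is a minimal sketch of that reasoning, assuming (hypothetically) that each valve is independently out of service 0.01 % of the time; this failure figure is illustrative, not a historical value.

```python
import math


def availability(n_valves: int, p_failed: float) -> float:
    """Fraction of time the whole machine works, assuming it needs every valve
    and each valve is independently out of service with probability p_failed."""
    return (1.0 - p_failed) ** n_valves


def valves_for_availability(target: float, p_failed: float) -> float:
    """Number of valves at which the machine's availability falls to `target`."""
    return math.log(target) / math.log(1.0 - p_failed)


P_FAILED = 1e-4  # hypothetical: each valve unusable 0.01 % of the time

for n in (1_000, 10_000, 18_000):
    print(f"{n:>6} valves: {availability(n, P_FAILED):.3f} of each hour usable")

# 57 minutes of down-time per hour leaves only 3/60 of the time usable.
print(round(valves_for_availability(3 / 60, P_FAILED)))
```

On that assumption, availability falls to about 37 % at 10,000 valves, and to 3 usable minutes per hour at roughly 30,000 valves.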

The development of transistors in the 1950s and of miniaturised integrated circuits (microchips) in the 1960s and 1970s led to progressive improvements in the size, energy consumption, computing power, reliability and cost of computer hardware. This enabled a great diversification in the applications of computing and in the machines which perform it. The first of these developments was the mini-computer - a relatively portable computer with sufficient processing power to perform tasks which had previously only been possible on mainframes. The central processor unit was typically accommodated within a cabinet which could be mounted on a trolley with the required peripherals and used within a normal office or laboratory environment. In comparison with mainframes, mini-computers had only modest technical support requirements. Their size reduced dramatically during the 1980s, to the extent that their current descendants, usually known as workstations, are visually very similar to personal computers. Mainframes themselves developed into supercomputers, with the emphasis on massive memory and data storage together with extremely fast processing. Supercomputers are now used to run huge database applications and numerical simulations of complex systems.

By the mid-1970s microchip technology had developed to the extent that significant computing power could be fitted within very small units, variously referred to as micro, desktop, personal or home computers. Initially they had very little on-board memory, but were directly programmable from the keyboard in BASIC and could load programs from audio cassettes. The early microcomputer manufacturers each had their own operating system (or control program), and there was no possibility of transferring programs or data directly from one type of machine to another. There were also several types of processor chip in use, each with its own instruction set, so that even programming language compilers had to be rewritten for each type. A considerable step forward came when a common operating system (CP/M) was written for one family of processors. This system spurred the production of a large range of microcomputers between which programs were interchangeable. This process of standardisation has continued to the extent that, at the time of writing, there are only two major groups of personal computers used in business and professional environments: the IBM PC-compatibles and the Apple Macintosh. In the case of PC-compatibles, little more than the basic specifications are set by IBM itself, and a huge worldwide industry exists to produce the hardware and software. No such 'compatible' manufacturing industry exists for the Macintosh, which, however, has a very strong software base in some areas, especially graphic design and publishing.

Despite the current multiplicity of ways for presenting and storing information, a facility for obtaining hard (paper) copy of input data, program listing, results of analyses, graphics and documents is still very important. For alphanumeric output hard copy is most conveniently obtained using a printer. In this area also, there is now a considerable range of options, but the principal change in recent years has been from hard-formed character printers to raster (or matrix) printers of various types. The great majority of modern printers belong to the latter group, in which the output is formed from a matrix of dots which covers the print area in similar fashion to the pixels which form screen images. In black-and-white printing each of these dots is simply turned on or off to form the character shapes or graphical images, and the fineness of the printed output depends on how densely the dots are spaced. The method by which the dots are printed on the paper constitutes the main technical difference between one printer type and another.

The original mainframe line printers were based on similar principles to the typewriter, with hard-formed characters being struck via an inked ribbon onto the paper. These line printers can achieve high-volume text output at high speed, but are very limited in their ability to print graphics. Their smaller derivatives include daisywheel and thimble printers, which suffer from the same limitation and also from rather slow printing, although their text output is generally of high quality.

Impact dot-matrix printers have been in use for many years and provide a relatively cheap system for producing output of reasonable quality for both text and graphics. A moving print head contains one or more vertical rows of pins each of which can be fired at the paper producing a single dot. Typical systems offer 9 pins in a single column or 24 pins in three offset columns. Draft output is produced rapidly by printing dots which do not overlap at all, while near letter quality (NLQ) is produced by simulating publishers' character fonts with arrays of overlapped dots. In simple 9-pin printers this is achieved by the print head making two passes over a line with a slight shift in position to give a denser, more precise image. Various fonts may be provided and a wide range of characters incorporated. Given the ability to control each pin of the print-head as it passes across the paper, it is also possible to print graphical images. These may be defined as bitmaps in which the image is stored as a continuous array of dots covering the whole print area and which may be sent to the printer as a simple screen dump which converts a screen pixel directly to one or more printer dots. Alternatively, vector images (such as engineering drawings) may be converted to bitmaps by software either at the computer or embodied in the printer.
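The conversion of a vector image to a bitmap mentioned above can be illustrated with Bresenham's integer line algorithm, one well-known way of choosing which dots of a grid best approximate a straight vector. This is a minimal sketch; it is not claimed to be the method used by any particular printer or driver, and the grid size is hypothetical.

```python
def rasterise_line(x0: int, y0: int, x1: int, y1: int) -> list[tuple[int, int]]:
    """Bresenham's line algorithm (all-octant integer form): return the grid
    dots that best approximate the vector from (x0, y0) to (x1, y1)."""
    dots = []
    dx, dy = abs(x1 - x0), -abs(y1 - y0)
    sx = 1 if x0 < x1 else -1
    sy = 1 if y0 < y1 else -1
    err = dx + dy  # running error term, kept in integers
    while True:
        dots.append((x0, y0))
        if x0 == x1 and y0 == y1:
            break
        e2 = 2 * err
        if e2 >= dy:  # step in x
            err += dy
            x0 += sx
        if e2 <= dx:  # step in y
            err += dx
            y0 += sy
    return dots


def to_bitmap(dots, width: int, height: int) -> list[str]:
    """Turn the chosen dots 'on' (#) in an otherwise blank (.) dot matrix."""
    rows = [["." for _ in range(width)] for _ in range(height)]
    for x, y in dots:
        rows[y][x] = "#"
    return ["".join(row) for row in rows]


for row in to_bitmap(rasterise_line(0, 0, 9, 3), width=10, height=4):
    print(row)
```

Only integer additions and comparisons are needed per dot, which is why this family of algorithms suits software running either at the computer or inside the printer.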

Much denser bitmaps can be achieved with laser printers, which deposit their dots electrostatically in a similar fashion to photocopiers. Although expensive, they offer excellent print quality, speed and flexibility (in terms of range of characters, fonts and print sizes). The high density of the matrix makes laser printers capable of printing high-quality graphical images as well as text. The cheaper inkjet printers, which project tiny individual droplets of ink at the paper from a moving print head, produce output of almost comparable quality, but are less flexible and much slower.

Most engineering drawings produced by CAD systems are stored as vector data (drawing instructions). Pen plotters, which have been in use for many years, obey these instructions by moving physical pens, acting very much as a mechanised draughtsman. Their manufacturing technology has developed to the extent that, at the time of writing, they still represent an economical way of producing large drawings at a reasonable speed, in multiple colours and with a variety of pen thicknesses. Since they are driven by servo-motors, there is no great penalty for increasing the physical size of the drawing space, and the amount of plotting data sent and stored is merely proportional to the number of vector instructions in the plot. However, their dependence on moving parts limits both the speed and the precision of plotting. These plotters cover the complete range of paper sizes in use, from A4 to A0. Since their whole method of working is to move the pen in vectors across the paper (sometimes moving the paper as well as the pen), the most economical way for them to produce text is to draw "simplex" characters rather than to attempt to simulate character fonts. For the same reason they do not perform well when used to produce blocks of solid colour, which they can only fill by "shading" the area with huge numbers of strokes. Continuous, or automatic, paper feed is usually available on higher-priced models.
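The point that plot data grows with the number of vectors, not with the paper size, can be seen in a small sketch. The mnemonics SP (select pen), PU (pen up, move without drawing) and PD (pen down, draw) follow HP-GL conventions, but the function below is a hypothetical, simplified generator, not a complete HP-GL implementation.

```python
def polyline_to_plot_commands(points, pen=1):
    """Return pen-plotter instructions for one polyline.
    Simplified HP-GL-style sketch: one instruction per vector drawn."""
    (x0, y0), rest = points[0], points[1:]
    cmds = [f"SP{pen};", f"PU{x0},{y0};"]     # select pen, move to start with pen raised
    cmds += [f"PD{x},{y};" for x, y in rest]  # lower pen and draw one vector per point
    return cmds


# A rectangle in plotter units (hypothetical coordinates).
rectangle = [(0, 0), (4000, 0), (4000, 2000), (0, 2000), (0, 0)]
cmds = polyline_to_plot_commands(rectangle)
print("".join(cmds))
print(len(cmds), "instructions")
```

Scaling every coordinate by ten, as for a much larger sheet, changes the numbers inside the instructions but not how many instructions are sent.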

Electrostatic plotters, which derive from laser printers, are increasingly used at the expense of pen plotters. Since a high-quality dot-matrix image requires massive amounts of memory at the plotter to hold it, the penalties for large paper sizes are at present considerable and these plotters can be very expensive. They are, however, very fast and accurate. As already mentioned, laser printers produce very high-quality plotted output, and they represent a much cheaper solution for the large amount of technical material for which the smaller paper sizes (A4-A3) are considered suitable. Inkjet plotters are also available at much lower prices than electrostatic plotters and provide an economical route to accurate colour plotting.
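The memory penalty of large raster plots is simple arithmetic: the dot count grows with the sheet area times the square of the resolution. The sketch below assumes a monochrome plotter at 400 dots per inch, which is an illustrative resolution rather than a figure from any particular machine; the sheet sizes are the ISO 216 dimensions.

```python
MM_PER_INCH = 25.4


def raster_bytes(width_mm: float, height_mm: float, dpi: int, bits_per_dot: int = 1) -> float:
    """Memory needed to hold one full-sheet dot-matrix image."""
    dots_x = round(width_mm / MM_PER_INCH * dpi)
    dots_y = round(height_mm / MM_PER_INCH * dpi)
    return dots_x * dots_y * bits_per_dot / 8


# ISO 216 sheet sizes in mm; 400 dpi monochrome is an assumed resolution.
SHEETS = {"A4": (210, 297), "A3": (297, 420), "A0": (841, 1189)}

for name, (w, h) in SHEETS.items():
    print(f"{name}: {raster_bytes(w, h, dpi=400) / 1e6:.1f} MB")
```

On these assumptions an A0 sheet needs about 31 MB, some sixteen times an A4 sheet, whereas the equivalent vector plot file depends only on the number of drawing instructions.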

In batch-processing systems all information, including program code and input data, is supplied by the user before any processing begins. This can be done in a number of different ways. Early mainframe systems used punched paper tape or cards, which were cumbersome to edit and prone to errors. These were superseded during the 1970s by magnetic tape and disk storage. On early microcomputers the tape often took the form of audio cassettes, which have now largely been replaced by the much more controllable floppy disks. These provide portable storage for a relatively large amount of data and, having been through several phases of development, have now settled for the present on the 3.5 inch format, which is robust enough to be almost self-protecting against reasonable physical abuse. The so-called hard disks found on many current personal computers provide both quicker access and very much greater storage capacity than floppy disks, but are usually not portable between machines. Tape cassette systems (often known as streamers) are now largely used for making compressed backup copies of material normally stored on hard disks.

A form of data storage rather different from the magnetic systems mentioned above is compact disk (CD-ROM) storage. This is essentially the same product as the CDs used for sound or video reproduction, and allows huge amounts of data, compared with the magnetic systems, to be held and rapidly retrieved. CD-ROM is often included with personal computers used for training and information retrieval, because it provides a facility for mixing software, large information bases and video-quality graphics interactively. In some cases it is possible to write to a CD as portable storage, but the space on the disk cannot be re-used once it has been written to, so that such a CD is considered a write once, read many (WORM) storage medium. However, where there is a need to produce, store and retrieve huge amounts of data, CD is the obvious choice.
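The write once, read many behaviour can be modelled as a storage object that accepts each block exactly once. This is a toy sketch of the semantics only; the class name and block structure are hypothetical and do not describe how data is actually laid out on a CD.

```python
class WormDisk:
    """Toy model of write once, read many (WORM) storage: every block can be
    written exactly once and then read any number of times."""

    def __init__(self, n_blocks: int):
        self._blocks = [None] * n_blocks  # None marks a block not yet written

    def write(self, index: int, data: bytes) -> None:
        if self._blocks[index] is not None:
            # Unlike a magnetic disk, used space cannot be overwritten or reclaimed.
            raise PermissionError(f"block {index} has already been written")
        self._blocks[index] = data

    def read(self, index: int) -> bytes:
        return self._blocks[index]

    def free_blocks(self) -> int:
        return sum(block is None for block in self._blocks)


disc = WormDisk(n_blocks=4)
disc.write(0, b"project archive, issue 1")
print(disc.free_blocks())  # 3
try:
    disc.write(0, b"issue 2")  # attempt to re-use the same space
except PermissionError as err:
    print("refused:", err)
```

A revised issue would have to be written to a fresh block, so the disk fills up even when old material is no longer wanted.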

It is now fairly common to use scanners to enter text and pictures directly into a computer from paper copy. The key to this technology is not so much in the ability of the scanner device to input a picture of the sheet placed upon it, but in the character-recognition software which resolves individual character bit-images into normal printer font characters. For graphics, the production of a bitmap of a photograph or a line-drawing is fairly straightforward. Software which produces vector plot files from bitmaps of engineering drawings exists, although at the time of writing it is still under development. In either case, scanned input can still be fairly unreliable, given the problems which can be encountered with the original paper documents.
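The core idea of resolving a character bit-image into a known character can be sketched as simple template matching: compare the scanned dots with a stored bit-image for each character and choose the closest. Practical character-recognition software is considerably more elaborate; the 3 x 5 templates below are hypothetical, hand-drawn examples.

```python
# Hypothetical 3x5 bit-images for a few characters ("1" = dot printed).
TEMPLATES = {
    "1": ["010", "110", "010", "010", "111"],
    "7": ["111", "001", "010", "010", "010"],
    "L": ["100", "100", "100", "100", "111"],
}


def hamming(a: list[str], b: list[str]) -> int:
    """Number of dots that differ between two equal-sized bit-images."""
    return sum(ca != cb for ra, rb in zip(a, b) for ca, cb in zip(ra, rb))


def recognise(bitmap: list[str]) -> str:
    """Return the template character closest to the scanned bitmap."""
    return min(TEMPLATES, key=lambda ch: hamming(TEMPLATES[ch], bitmap))


# A scanned '7' with one speck of noise in the bottom-left corner.
scanned = ["111", "001", "010", "010", "110"]
print(recognise(scanned))
```

A single stray dot still leaves the '7' closest, but a badly marked original can easily push a character nearer to the wrong template, which is one reason scanned input can be unreliable.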

Direct interactive use of computers was not possible on the early mainframes, but it has progressively become the most effective method of use in most cases. Initially, dumb terminals were used so that users could type and send to the computer directly the kind of batch programming commands which had previously been read from punched cards. However, with mainframes two-way communication was slow since a large number of users might be sharing time on the central processor and data transmission rates were rather low in any case. It was only when communication and processing speeds had increased that interactive programs became possible. At this point, an executing program could be made to pause and request additional data or decisions from the user at the remote terminal, and to resume execution when this data had been entered. Results could be shown on the terminal or printed as a hard copy.

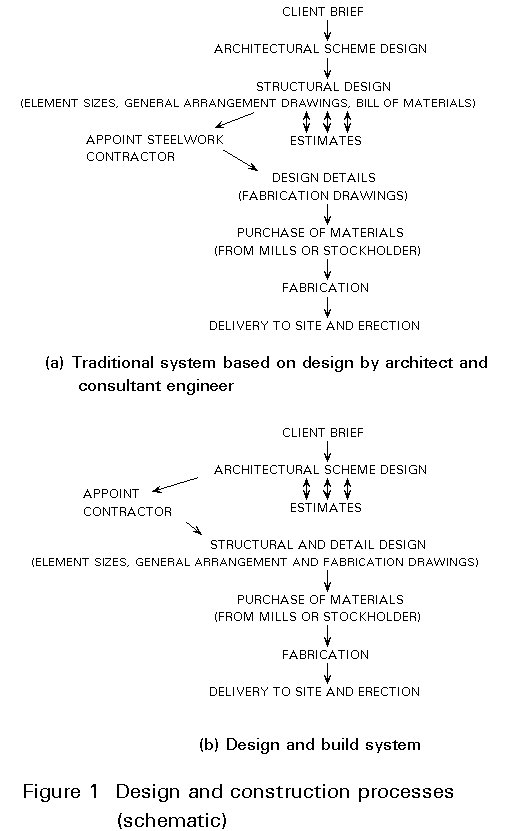

The use of dumb terminals has now largely been superseded by distributed computing. The personal computer itself has enough processing power and memory for most applications, so that communication with the central processor is not subject to time-sharing and truly interactive software is possible. Where software or data needs to be shared between a number of users, computers tend to be attached to a network. In a network a number of computers, each of which uses its own processing power, are linked together (Figure 2) so that each has access to the others and, more importantly, each has access to a very large central filestore on which data and software are stored. This filestore is controlled by a "slave" computer known as the file server, which generally runs the network. When a computer in the ring needs to use a particular program, it loads the program from the filestore and runs it locally. Data produced by one computer can be held in a common database on the central filestore and accessed by others. Such networks are often provided with gateways to larger national or international networks, so that information can be shared by a large group of people. Even with a home computer, a modem allows a user to access the network via an ordinary telephone connection, thus providing a dial-in facility. This possibility obviously implies that data needs protection against corruption by unauthorised users and, in some cases, that confidentiality must be maintained. Various systems of password protection are used to try to ensure that network users do not have access beyond the areas in which they have a legitimate interest.

Computers are not the only devices which can be attached to a network. Most of the common types of peripheral (such as printers, plotters, scanners and other input/output devices) can also be attached. In the case of a plotter, say, the file server controls access to the device by queuing the output sent to it, so that jobs are passed to the plotter one at a time under the server's control. This queuing system can be applied to any peripheral device which can be attached to the network; in the context of a fabrication plant, it can be applied to a numerically controlled workshop machine for which a number of jobs may be waiting at any one time.
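The queuing of jobs for shared devices can be sketched as one first-in, first-out queue per device. This is a minimal illustration assuming first-come, first-served order; the class name and the device and job names are hypothetical, and real network spoolers also handle priorities, cancellation and device status.

```python
from collections import deque


class DeviceQueue:
    """Sketch of a file server's spooler: jobs for each shared device wait in
    first-come, first-served order and are released one at a time."""

    def __init__(self):
        self._queues: dict[str, deque] = {}

    def submit(self, device: str, job: str) -> None:
        self._queues.setdefault(device, deque()).append(job)

    def next_job(self, device: str):
        """Release the oldest waiting job for a device, or None if idle."""
        q = self._queues.get(device)
        return q.popleft() if q else None

    def waiting(self, device: str) -> list[str]:
        return list(self._queues.get(device, ()))


server = DeviceQueue()
server.submit("plotter", "GA drawing rev B")
server.submit("nc_drill", "column C3 base plate")
server.submit("plotter", "connection details")
print(server.next_job("plotter"))  # GA drawing rev B
print(server.waiting("plotter"))   # ['connection details']
```

The same structure serves a plotter in the drawing office and a numerically controlled drill in the workshop; only the device name differs.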

The term user interface refers to the way in which the user and the computer exchange information. In the most basic sense it might refer to how the user gives instructions when the computer is first accessed or switched on, and to how the computer responds.

It is controlled by the computer's operating system, which is loaded from its hard disk when it is started, and includes a series of utility functions which can be initiated by appropriate (shorthand) commands issued by the user. As many of these functions are concerned with file operations on a disk (deleting, running, renaming, etc.), the operating system is usually referred to as a disk operating system, or DOS.

In the days of dumb terminals the only two functions of a user interface were:

The nature of this interaction was very sequential. Lines of text filled the screen from top to bottom, after which the display scrolled up as each new line was added at the bottom.

With the very fast data transfer rates which are now possible, and because a screen is controlled by a single computer, communication between computer and screen is virtually instantaneous as far as the user is concerned. This has enabled very rapid development of the user interface, with the objective of making the use of computers a more "natural" and less specialised human activity. It has increasingly been recognised that normal thought processes are largely based on pictorial images rather than verbalised logic. Opening up the use of computers to the majority of people depends on removing the need to learn even high-level programming languages, including the specialised commands of an operating system or of a piece of software.

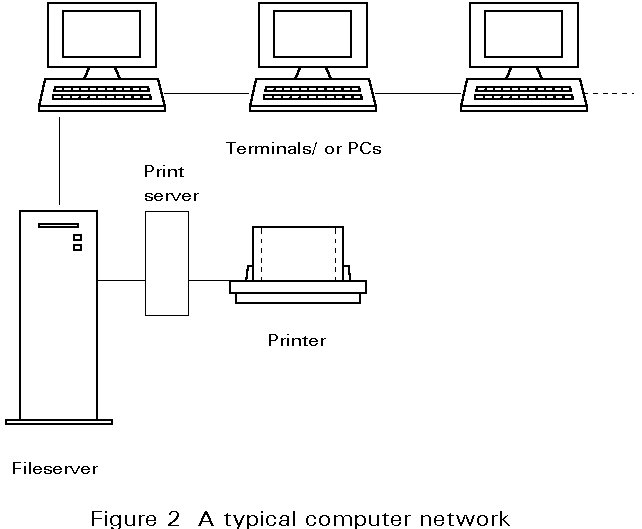

The current generation of windowing user-interfaces (Figure 3) has attempted to minimise the amount of specialist knowledge needed by users and to address the non-verbal nature of human decision-making. Their basic context is a computer screen, considered as a desktop on which a number of ledgers (windows) are placed. These ledgers contain collections of tools (programs) and documents (data files). The ledgers may be put into the background or brought forward and their contents displayed, and one ledger may be partially overlaid by another. The tools are each represented by an icon - a small picture - and a title. A pointer directly controlled by a mouse is used to select a program simply by pointing at it and clicking a button on the mouse. Once a piece of software is running it obeys the common standards of the windows interface, so that there is no new working method to be learned by the user on coming to a new software tool. The working principle is usually to minimise the use of the keyboard for decision-making (it is obviously the best tool for direct text or data entry) by using the pointer to select options using a large but standard range of visual devices on the screen. These options include pull-down menus and dialogue boxes, both of which are small screen overlays on which selections can be made, which remove themselves after the action has been taken. It is currently fashionable to make major selections by "pushing buttons" with the pointer. It is possible, while running one program in a window, to pause operation and use another application in another window. This is not true multi-tasking, since there is only one program running actively at a time, but it is possible to mix a range of tasks in a given period without completely closing down any one of them. 
For example, in writing a technical report it might be appropriate to keep a word-processor, a spreadsheet, a specific design or analysis program and a CAD program all open simultaneously, so that the final document can be produced as new figures, calculation results and tabular information or graphs are generated or modified. Real multi-tasking, in which a large finite element analysis, for example, could be running while more routine interactive tasks are being performed, is only available in practice on the most powerful types of workstation.
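The distinction between switching between windows and true multi-tasking can be modelled with cooperative scheduling, in which each program runs until it voluntarily hands back control. The sketch below uses Python generators as stand-ins for programs; it is an analogy for the behaviour described, not a description of how any windowing system is implemented.

```python
def task(name: str, steps: int):
    """A 'program' that does a little work, then voluntarily yields control."""
    for i in range(1, steps + 1):
        yield f"{name} step {i}"


def run_cooperatively(tasks) -> list[str]:
    """Round-robin scheduler: only one task runs at a time, and it keeps
    control until it yields, so no task is ever pre-empted."""
    log, ready = [], list(tasks)
    while ready:
        current = ready.pop(0)
        try:
            log.append(next(current))
            ready.append(current)  # back of the queue until its next turn
        except StopIteration:
            pass  # task finished; drop it
    return log


log = run_cooperatively([task("word-processor", 2), task("spreadsheet", 3)])
print(log)
```

If one task never yielded, for example a long finite element solution written as a single step, every other task would wait, which is the practical limitation of this arrangement compared with real multi-tasking.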

Although window interfaces make computers accessible to a very wide range of potential users, they present some difficulties for software developers. The requirements for on-board memory and for hard disk storage are high. Development of original software for windows environments is usually slow and time-consuming, so the economics of writing original technical programs for a restricted market are not always favourable. Converting well-established software running in the normal operating system environment, so that it keeps its full functionality and retains the working methods which have made it popular while taking advantage of the common user interface, is an even more difficult task. It is, therefore, often necessary to work within the normal keyboard-based operating system environment. On PCs this is usually MS-DOS and on workstations Unix. Using a computer in these environments requires much more understanding of the functions of the operating system and of how data is stored on disk. Visually, the user sees a blank screen, or part of a screen, with a flashing cursor to the right of a brief prompt. To make the computer perform any useful task it is necessary to type a command in the operating system's command language. This is less daunting than it sounds: with only a few commands in one's vocabulary and a working knowledge of the directory structure of hard disks, it is possible to work very effectively with either a personal computer or a workstation.

At the level of the processor chip, very large numbers of very simple instructions are executed to perform even the simplest computing task. Programming a computer in such terms is very tedious and is attempted only when execution speed is the highest priority for an item of software. High-level programming languages provide an alternative means of presenting a sequence of more advanced instructions to a computer in a form reasonably close to ordinary language. The set of instructions (the computer program) is then translated (compiled) into machine code which the processor can execute.
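The expansion of one high-level statement into several simpler instructions can be observed with Python's standard `dis` module. Note the hedge: Python compiles to bytecode for its own virtual machine rather than to processor machine code, so this is an analogy for compilation, and the exact instruction names vary between Python versions.

```python
import dis


def axial_stress(force_n: float, area_mm2: float) -> float:
    # One line of high-level code...
    return force_n / area_mm2


# ...is translated into a sequence of simpler, lower-level instructions.
for instr in dis.get_instructions(axial_stress):
    print(instr.opname, instr.argrepr)
```

Each printed line corresponds to one elementary step such as loading a variable, dividing or returning a result; a native compiler for FORTRAN or C performs a comparable translation into the processor's own instruction set.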

Any programming language has a vocabulary of functional commands and a set of syntax rules. In addition, there are numerous arithmetic operators, including many of those used in conventional mathematics, and the ability to use variables of many different types. The programmer prepares a list of such instructions which represents the flow of control within the program. There are numerous programming languages, nearly all of which are capable of performing most programming tasks, but each of which has a basic philosophy that makes it efficient in a specific field. For engineering applications FORTRAN (originally used on mainframes for batch processing) is still very widely used on account of its mathematical efficiency and its huge library of mathematical subroutines. The world's most popular language for general programming is BASIC, which exists in many different forms, from the almost unstructured interpreted versions generally bundled with personal computers to very advanced compiled languages with very large libraries of functions. Perhaps the most versatile and powerful general-purpose language used by professional programmers is C, which includes operators allowing very easy direct access to computer memory. Other languages are used mainly in specific types of application with their own functional requirements, and it is not necessary to go into their detail here. In any case, most computer users do not need to write programs at all, but use software produced by professional developers over many man-years. A particular exception is in spreadsheets, and occasionally databases, for which it may be convenient to write applications in the high-level languages included in these types of software.

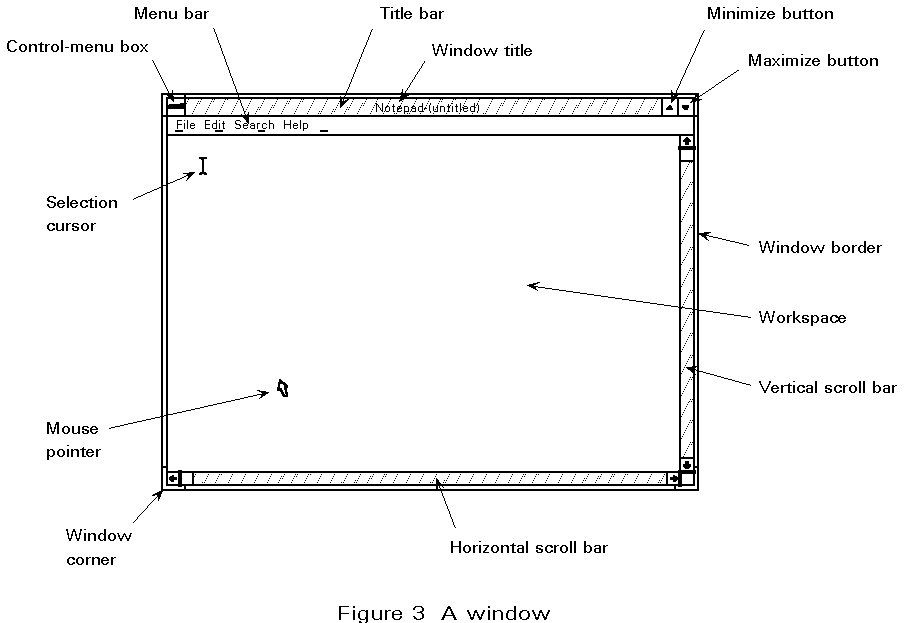

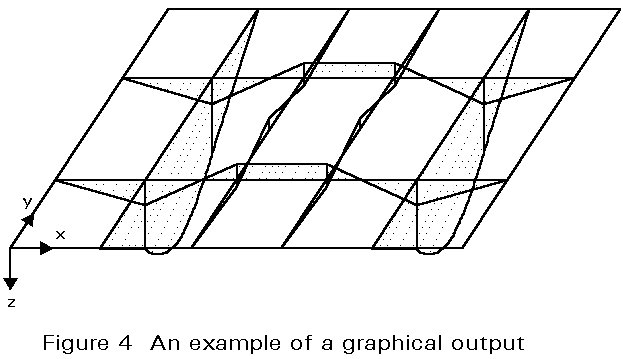

Largely because of its direct links with computational research in universities, structural analysis software has been available for a long time, initially on mainframe computers but more recently on all types of hardware. Except in the most complex analytical processes, the power of modern personal computers is adequate for even the more specialised tasks needed in structural engineering. For statically determinate analysis of structural components, the analysis is normally contained within the detail design software. Elastic analysis of plane frames or grillages is probably the most useful general tool for the structural designer, and it now exists on personal computers in a multiplicity of different forms. The important differences between these programs tend to lie more in their ease of use than in their technical capabilities; all tend to have graphical display capabilities (Figure 4), so that geometry and results can be viewed conveniently, but the processes for editing geometry and loads vary widely, as do their capabilities for interacting with design and CAD software. Nonlinear, elasto-plastic and three-dimensional frame analyses are now routinely available on personal computers, usually within general-purpose finite element packages derived from mainframe software developed in academic research. These packages, although useful for checking stresses, deflections and dynamic motions in very complex cases, tend to be over-specified for most structural design problems, require very large amounts of data to be defined and often produce far more output than is necessary. They are more appropriate for the final validation of a design than for the earlier stages, when analysis is often used as part of the member selection process.
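The stiffness method at the heart of elastic frame analysis programs can be illustrated on the simplest case: a two-span continuous beam with equal spans and a uniformly distributed load, whose unknowns are the rotations at the three supports. This is a teaching sketch under stated assumptions (prismatic members, no axial or support settlement effects, clockwise end moments positive); the load, span and stiffness values are hypothetical, and a real program assembles much larger matrices for general plane frames.

```python
def solve(a, b):
    """Gaussian elimination with partial pivoting for a small dense system."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[piv] = m[piv], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def two_span_beam(w, L, EI):
    """Support moment at B for a two-span continuous beam A-B-C (equal spans L,
    UDL w on both spans) by the stiffness method.
    Unknowns: rotations at A, B, C. Clockwise end moments positive."""
    k = 2 * EI / L                 # member stiffness coefficient 2EI/L
    fem = w * L**2 / 12            # fixed-end moment: FEM_AB = -fem, FEM_BA = +fem
    K = [[2 * k, k, 0.0],
         [k, 4 * k, k],
         [0.0, k, 2 * k]]
    # Joint loads = minus the sum of fixed-end moments meeting at each joint.
    P = [fem, 0.0, -fem]
    tA, tB, tC = solve(K, P)
    return k * (tA + 2 * tB) + fem  # end moment M_BA, i.e. the moment over support B


print(two_span_beam(w=10.0, L=6.0, EI=4.0e4))  # kN, m units
```

The result should agree with the textbook value wL²/8 = 45 kNm over the central support, and, as expected for a statically indeterminate beam of uniform section, it does not depend on the value chosen for EI.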

Structural design software is a much more recent phenomenon, since it relies very heavily on interaction with the design engineer and only started to become widespread when microcomputers began to flourish in the early 1980s. Much structural design involves relatively simple calculations - standard loading calculations, analysis and element sizing based on rules embodied in codes of practice. These calculations have traditionally been performed by hand, but interactive computing now enables designers to take advantage of the power of the computer without relinquishing control over design decisions. Design software relieves the designer of the tedium of laborious manual calculations - in many cases a degree of 'optimisation' is incorporated within the program, but decisions about selecting the most appropriate individual member sizes remain with the designer. Design software now reaches into nearly all areas, but is very variable in its nature, style and quality. The best allows considerable flexibility in use, making revisions to existing designs easy and allowing data to be exchanged with software for analysis, CAD and modelling and for estimating quantities.
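A sketch of the kind of rule-based element sizing described above, assuming a laterally restrained beam in bending only and a plastic moment resistance of the Eurocode 3 form W_pl·f_y/γ_M0. The section names and properties are invented placeholders, not catalogue data, and a real program would also check shear, deflection and lateral-torsional buckling. The function returns every adequate section, lightest first, so that the final choice stays with the designer.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Section:
    name: str
    mass: float   # kg/m
    w_pl: float   # plastic section modulus, mm^3


# Hypothetical placeholder properties for illustration only; not catalogue data.
SECTIONS = [
    Section("SEC-A", 30.0, 400e3),
    Section("SEC-B", 40.0, 620e3),
    Section("SEC-C", 45.0, 780e3),
    Section("SEC-D", 55.0, 1050e3),
]


def moment_resistance(sec, fy, gamma_m0=1.0):
    """Plastic moment resistance W_pl*fy/gamma_M0 in kNm (fy in N/mm^2).

    gamma_m0 is an assumed partial factor; take its value from the applicable code.
    """
    return sec.w_pl * fy / gamma_m0 / 1e6


def adequate_sections(m_ed, fy, sections=SECTIONS, gamma_m0=1.0):
    """All sections whose resistance covers the design moment m_ed (kNm), lightest first."""
    ok = [s for s in sections if moment_resistance(s, fy, gamma_m0) >= m_ed]
    return sorted(ok, key=lambda s: s.mass)
```

For a simply supported 8 m beam carrying 25 kN/m, the design moment is 25 × 8²/8 = 200 kNm, and `adequate_sections(200.0, fy=355)` lists SEC-B, SEC-C and SEC-D in that order.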

In the context of steel structure design, the material available starts with "free disks" provided by manufacturers of cold-formed products such as sheeting, composite decking and purlins, which effectively provide quick look-up tables of safe working loads and spans against key dimensions. Element design to various codes covers beams (both steel and composite), columns and beam-columns, and connections of various kinds. Whilst element design usually takes the form of free-standing executable programs, the power of present-day spreadsheet software is such that applications for standard spreadsheets can provide a very flexible way of automating these fairly straightforward design processes, with good links to other standard software. Plastic design of steel frames, particularly low-rise frames such as portals, is available in different degrees of sophistication, in terms of its convenience in use, its links to downstream software and CAD, and the order of analysis it offers. Plastic design is one area where different degrees of analytical capability provide different orders of realism in results; a more fully non-linear analysis, which allows the development of plastic zones, can produce distinctly lower load resistances than the rigid-plastic and elastic-plastic versions.
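The rigid-plastic end of this range can be sketched with the mechanism method for the classical fixed-base rectangular portal carrying a vertical point load at mid-span and a horizontal load at eaves level. With a uniform plastic moment M_p, equating external and internal virtual work for the beam, sway and combined mechanisms gives three load factors, and the collapse load factor is the lowest of them. The sketch ignores axial-force and second-order effects, which is one reason why the more elaborate analyses mentioned above can give lower resistances; the function name is hypothetical.

```python
def portal_collapse_factor(mp, span, height, v, h):
    """Rigid-plastic collapse load factor of a fixed-base rectangular portal.

    mp: plastic moment of all members (kNm); span, height (m);
    v: vertical point load at mid-span (kN); h: horizontal load at eaves (kN).
    Both loads are assumed positive. For a hinge rotation theta:
      beam:     v*(span/2)*theta             = mp*(1+2+1)*theta
      sway:     h*height*theta               = mp*(1+1+1+1)*theta
      combined: (v*span/2 + h*height)*theta  = mp*(1+2+2+1)*theta
    The collapse factor is the lowest of the three (upper-bound theorem).
    """
    factors = {
        "beam": 4 * mp / (v * span / 2),
        "sway": 4 * mp / (h * height),
        "combined": 6 * mp / (v * span / 2 + h * height),
    }
    mechanism = min(factors, key=factors.get)
    return factors[mechanism], mechanism
```

With M_p = 100 kNm, a 10 m span, 5 m height and 40 kN applied both vertically and horizontally, the combined mechanism governs with a load factor of 1.5; if the horizontal load is reduced to 10 kN and the vertical load raised to 60 kN, the beam mechanism governs instead.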

Perhaps the most important thing to appreciate about design software is that different ways of working will be convenient for different design environments. A steel fabricator with a large commitment to design-and-build will really need an integrated system, preferably based on a 3-D modeller, in which it is easy to handle large numbers of members, to standardise sizes and connections, to make rapid revisions, and to produce accurate costing and fabrication data. A small firm of general consulting engineers, on the other hand, may find it more convenient to keep a fairly extensive library of free-standing design programs with an easily understood user-interface, so that basic member sizing and presentation calculations for building control approval can be done reliably and without a significant re-learning process when the software is occasionally used.

The development of interactive graphics at about the beginning of the 1970s provided the opportunity for using computers for draughting. These early systems used mainframe computers with graphics terminals ("green" screens) and provided three-dimensional draughting capabilities. Initially their use was limited to heavy manufacturing industry, particularly the production of aircraft, ships and motor cars, where the benefits of 'mass' production justified the enormous investment then required for CAD. Even in those pioneering days, the output from CAD systems was providing automatic bills of quantities and was also being linked to numerically controlled (NC) machines, thus improving manufacturing efficiency.

In the late 1970s the development of 'super mini' computers was a significant factor in a very large growth in the use of CAD. They provided a single-user facility and can be referred to as 'personal designers'. Application was still concentrated in the production-based industries, but there was increasing use of relatively cheap, unsophisticated, two-dimensional systems in the construction industry. These personal designers were difficult to learn and use, largely because they were not developed with the end-user in mind. User interfaces, which were not standardised, generally took the form of a command line with complex syntax. The capabilities typically replicated those of conventional draughting processes and often provided little additional intelligence. For instance, it was often possible to change the numerical value of a dimension without the drawn length changing, and without any warning message. Some simple systems still allow this. The advantages of this type of CAD are very limited: essentially the ease of revising a drawing and replotting it. The time taken to produce the original drawing was often as long as, or longer than, that needed to produce the same drawing on a conventional drawing-board.
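The difference between a dimension stored as plain text and one tied to the geometry can be sketched as follows. The class names are hypothetical; the point is that the associative dimension has no value of its own, so the drawn length and the figure shown can never disagree.

```python
import math
from dataclasses import dataclass


@dataclass
class Line:
    x1: float
    y1: float
    x2: float
    y2: float

    def length(self):
        return math.hypot(self.x2 - self.x1, self.y2 - self.y1)


@dataclass
class TextDimension:
    """Early-style dimension: a piece of text with no link to any geometry."""
    text: str


class AssociativeDimension:
    """Dimension whose value is always derived from the line it annotates."""

    def __init__(self, line):
        self.line = line

    @property
    def text(self):
        return f"{self.line.length():.0f}"

    def set_value(self, new_length):
        """Editing the dimension moves the line's end point along its own direction."""
        old = self.line.length()
        if old == 0:
            raise ValueError("cannot scale a zero-length line")
        k = new_length / old
        self.line.x2 = self.line.x1 + (self.line.x2 - self.line.x1) * k
        self.line.y2 = self.line.y1 + (self.line.y2 - self.line.y1) * k
```

Changing a `TextDimension` from "6000" to "7500" leaves a 6000 mm line untouched, which is exactly the silent inconsistency described above; calling `set_value(7500.0)` on an `AssociativeDimension` lengthens the line itself.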

More sophisticated features have rapidly been introduced, offering greater advantages. The advantages start with improved geometrical constructions such as:

These facilities are now fairly typical in professional personal computer CAD tools. Increased intelligence has been introduced into the way elements are represented, for instance by establishing specific relationships between drawn elements. There is, however, a penalty to be paid for storing data in an intelligent form, since:

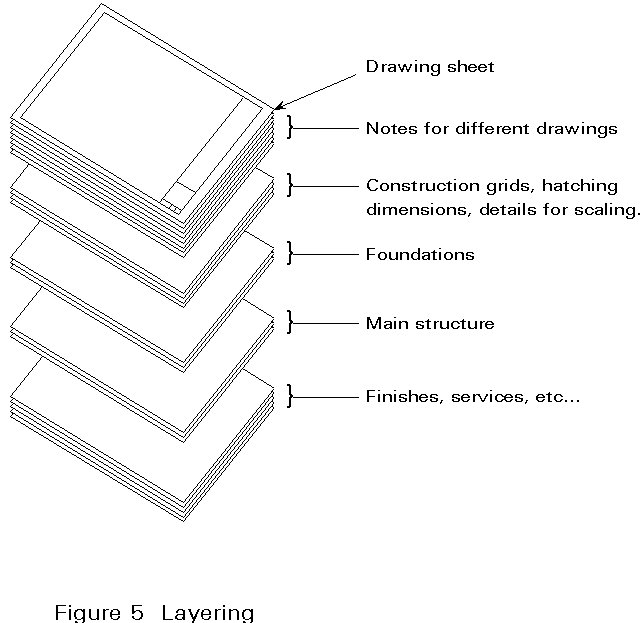

Two-dimensional draughting systems still have a role in the production of general arrangement drawings, traditionally the responsibility of the consulting engineer. Unless the system is subsequently to be used to produce detail drawings, which are normally the fabricator's responsibility, there is no real advantage to be gained for this kind of user by adopting the three-dimensional structural modelling approach. A standard 2D system also allows easy interaction with architects and building services engineers. It also enables the integration of different parts of the civil and structural engineering design work via simple layering. Drawings, or parts of drawings, are easily copied directly into word processing packages for report writing. It may also be possible, in future, for the 2D system to act as a partial pre-processor for full structural modelling.

Three-dimensional CAD systems can vary from a simple wire-frame model which operates on lines only, through surface modelling to complete solid modelling which requires comprehensive data definition and relationships but offers enormous potential.

Simple three-dimensional systems offer little advantage over 2D CAD for the construction industry. However, the development of specialised forms of modelling system provides enormous power with direct relevance to steelwork fabrication (including detail design). In this context, the 3D solid model is a means of representing the complete structure, as distinct from conventional CAD, where individual elements are merely drawn as flat shapes. This provides a complete description of the steelwork, including connections, from which all necessary fabrication and erection information can be extracted automatically. The model is typically created in a manner similar to the design sequence itself: coarsely defined at the start, with progressively more detail added as appropriate.

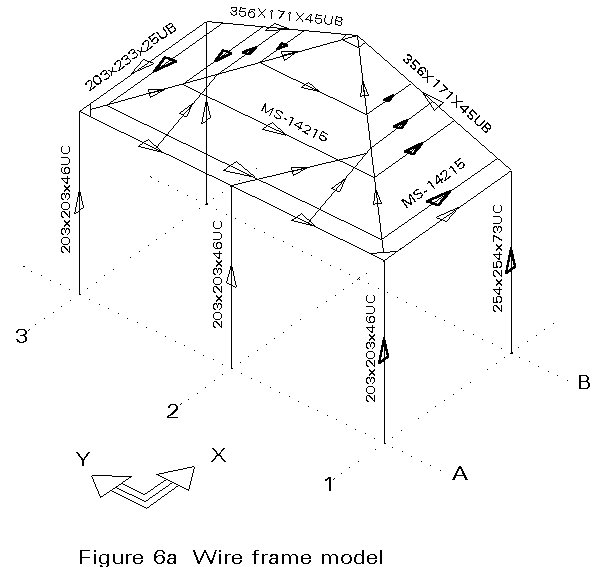

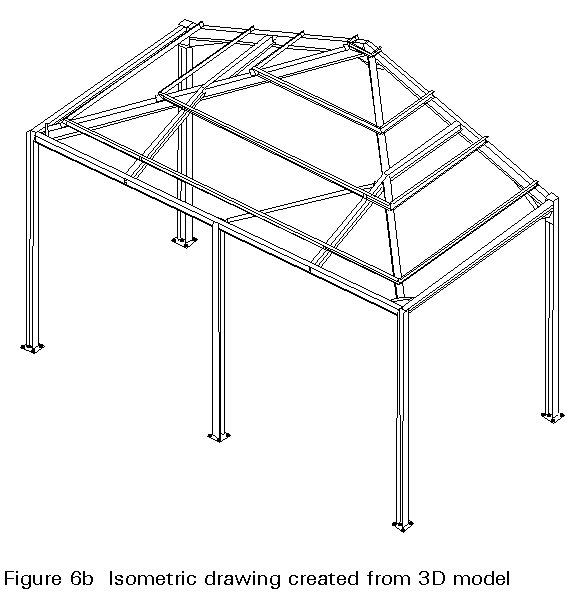

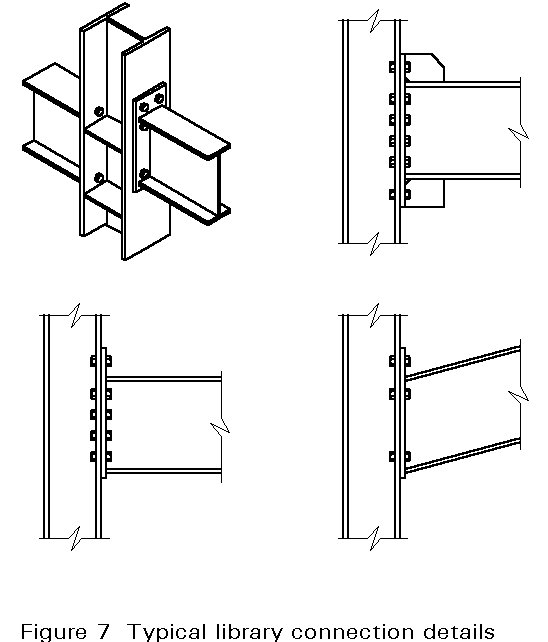

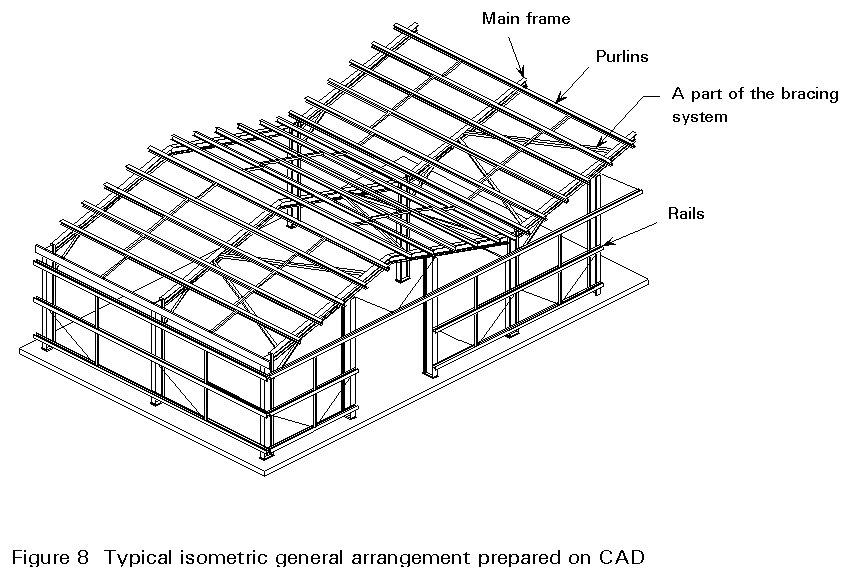

Initially the structural layout is defined using a wire frame model (Figure 6a). This can be done with the aid of a 3D framework of grid lines and datum levels and corresponds to the general arrangement produced by the architect or consulting engineer. With 3D modelling, it is also possible at this stage to generate more detailed engineering drawings, including isometric views (Figure 6b). Information regarding section sizes, geometric offsets and additional data such as end reactions from design calculations can all be entered very easily. The fabricator's next responsibility is to design connection details. Detail design is facilitated by using a library of standard connection types (which can be tailored to suit the needs of individual companies or clients) which will scale automatically to account for the members to be connected (Figure 7). Appropriate detailed calculations can also be performed according to accepted design rules and based on the end reactions prescribed when setting up the wire frame model. Where non-standard connections are required, interactive modelling facilities exist for constructing the appropriate details, either from first principles or by modifying standard forms. These can be added subsequently to the library for future use.
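Setting out the wire frame from grid lines and datum levels, as described above, is essentially a matter of generating nodes at the grid intersections and joining them. A minimal sketch, assuming a regular orthogonal grid with a column at every intersection and beams along every grid line above the base level; the data layout and function name are assumptions, and section sizes, offsets and end reactions would be attached to the members as further attributes.

```python
from itertools import product


def wireframe(x_grid, y_grid, levels):
    """Nodes and members of a regular frame defined by grid lines and datum levels.

    x_grid, y_grid: grid-line positions (m); levels: datum levels (m), lowest first.
    Returns (nodes, members): nodes maps grid indices (i, j, k) to (x, y, z);
    members is a list of (kind, start_key, end_key).
    """
    nodes = {
        (i, j, k): (x, y, z)
        for (i, x), (j, y), (k, z) in product(enumerate(x_grid), enumerate(y_grid), enumerate(levels))
    }
    members = []
    for i, j, k in nodes:
        if k + 1 < len(levels):                      # column up to the next level
            members.append(("column", (i, j, k), (i, j, k + 1)))
        if k > 0:                                    # beams at every level above the base
            if i + 1 < len(x_grid):
                members.append(("beam", (i, j, k), (i + 1, j, k)))
            if j + 1 < len(y_grid):
                members.append(("beam", (i, j, k), (i, j + 1, k)))
    return nodes, members
```

Three grid lines at 6 m centres in one direction, two at 8 m in the other and datum levels at 0, 4 and 8 m give 18 nodes, 12 column lengths and 14 beams.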

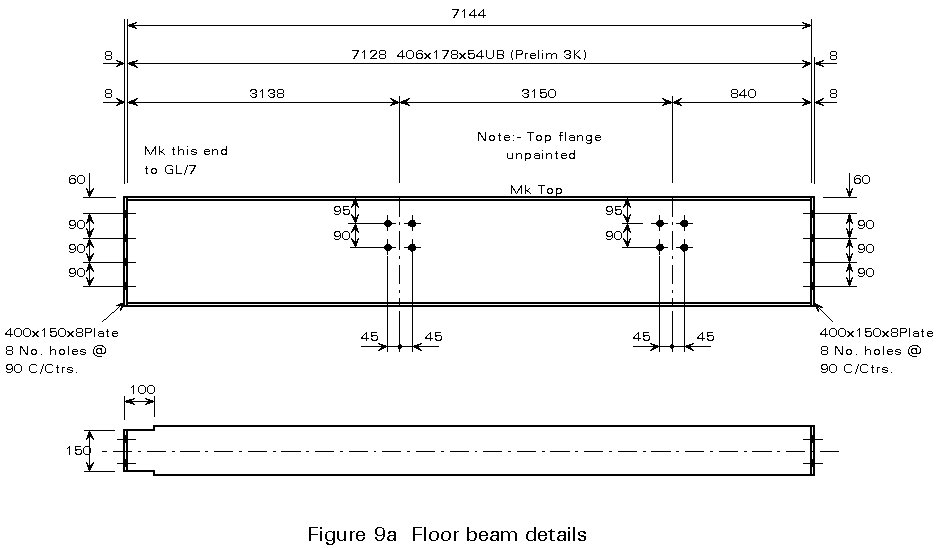

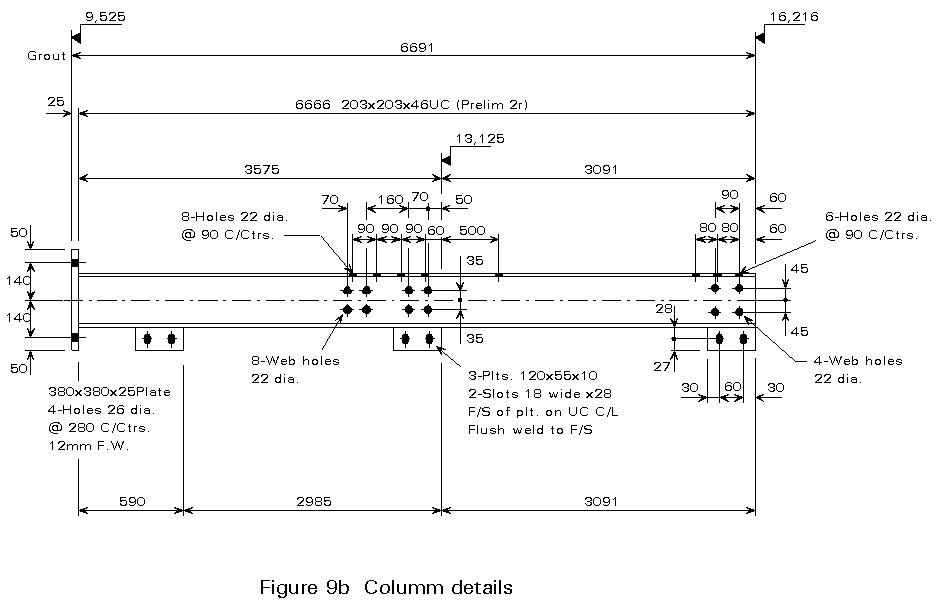

The definition of a 3D model in this way contains a complete geometrical and topological description of the structure, including all vertices, edges and surfaces of each physical piece of steel. As a result, all element dimensions are automatically tested for compatibility, and clashes, which can easily go undetected in traditional processes, are eliminated. The model allows the efficient generation of conventional drawing information, including general arrangement drawings (plans, elevations, sections, foundations, isometric views - Figure 8), full shop fabrication details for all members, assemblies and fittings (Figures 9a and 9b), and calculation of surface areas and volumes for all steelwork. Further benefits of such systems are related to the links which can be established with other parts of the production process. Full size templates can be drawn, e.g. for gusset plates, and wrap-around templates for tubes. Erection drawings can be output and material lists (including details of cutting, assembly, parts, bolts, etc.) produced automatically. An interface to a management information system can also facilitate stock control, estimating, accounting, etc. Potentially of greatest importance is the possibility of downloading data directly to Numerically Controlled (NC) fabrication machinery, automating much of the fabricating work itself. At this level, 3D modelling is the central controlling tool for an integrated steel fabrication works in which the total design-and-build package is offered.
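A much simplified sketch of clash checking, assuming each piece is represented only by its axis-aligned bounding box. A solid modeller tests the exact geometry, but cheap box tests of this kind are a common first screen. The piece marks and coordinates in the example are invented, and pieces that merely touch, such as a beam end bearing against a column face, are not reported.

```python
from dataclasses import dataclass
from itertools import combinations


@dataclass(frozen=True)
class Box:
    mark: str     # piece mark
    lo: tuple     # (x, y, z) minimum corner, mm
    hi: tuple     # (x, y, z) maximum corner, mm


def overlaps(a, b, tol=0.0):
    """True if the boxes interpenetrate by more than tol along every axis."""
    return all(min(a.hi[d], b.hi[d]) - max(a.lo[d], b.lo[d]) > tol for d in range(3))


def clashes(pieces, tol=0.0):
    """All pairs of piece marks whose boxes interpenetrate (checks every pair)."""
    return [(a.mark, b.mark) for a, b in combinations(pieces, 2) if overlaps(a, b, tol)]
```

In the example a beam bears against a column face without penetrating it, while a misplaced bracket passes through the beam, so only the beam and bracket pair is reported.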

In more general terms, surface modelling provides additional information about a 3D model. At its simplest, but probably most cumbersome, this can take the form of defining boundaries within which there is a surface with specified characteristics. More sophisticated surface modelling techniques, such as rubber surfacing which allows a surface to be stretched and squeezed into shape, are not directly relevant to most construction work, but are particularly valuable where shell forms are being developed, e.g. for motor car body design and manufacture. It may be that developments in steelwork modelling of the type described above will allow a convenient way of integrating the skeletal models with surface models of the building envelope and architectural visualisation models, but at the time of writing this is not yet a reality.

The general arrangement drawings have typically provided the basis for a Bill of Quantities used for tendering. Preparation of a Bill requires the weight of steelwork in different parts of the structure to be calculated, including an allowance for attachments and connections, and a brief description of the operations required for fabrication and erection. The specification, which may be in a largely standardised form, provides additional information, e.g. regarding the corrosion protection system to be applied. The Bill of Quantities is traditionally prepared by hand. However, if a suitable 3D modeller is used, the output can form the basis of the Bill, with quantities called off automatically. This technique not only avoids time spent on tedious calculation, but also minimises the potential for errors in the quantities. As part of the steelwork detail drawings, each item is given a unique reference number. This number is used to identify each workpiece in the subsequent fabrication and erection operations and also serves as the basis for a materials list which is issued for ordering stock and planning production.
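Calling off quantities from the model amounts to summing length times mass per metre over every piece, grouped by section. A minimal sketch, assuming each piece is recorded as (piece mark, section, length in metres) and that a single fractional allowance covers attachments and connections; the allowance, section names and masses per metre in the example are placeholders. The check on unique piece marks reflects the referencing system described above.

```python
from collections import defaultdict


def bill_of_quantities(pieces, mass_per_m, allowance=0.0):
    """Tonnes of steel per section.

    pieces: (mark, section, length_m) tuples; mass_per_m: kg/m for each section.
    allowance: fractional addition for attachments and connections (assumed value).
    """
    marks = [p[0] for p in pieces]
    if len(marks) != len(set(marks)):
        raise ValueError("piece marks must be unique")
    kg = defaultdict(float)
    for _mark, section, length in pieces:
        kg[section] += mass_per_m[section] * length
    return {sec: kg[sec] * (1 + allowance) / 1000 for sec in kg}
```

Two 6 m beams at 40 kg/m and one 4 m column at 60 kg/m, with a 10% allowance, give 0.528 t and 0.264 t respectively.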

In a design-and-build contract, a formal Bill of Quantities is not used. Instead the steelwork contractor must estimate a lump sum on the basis of experience and preliminary calculations. When the contract is awarded, the fabricator produces the design calculations and general arrangement drawings. Preliminary buying lists for purchasing stock from the steel mills or stockholders are then required and the sequence of operations follows a similar route to the more traditional method of procurement. In this environment also, it is clear that use of a suitable 3D modeller can enhance the accuracy of estimation of quantities, even before a complete detailed solid model exists.

Traditional methods of preparing steelwork elements for construction - cutting to length, drilling, making attachments (cleats, brackets, etc.) and assembling sub-frames (e.g. trusses) were labour-intensive, and based on precise information on the steelwork detail drawings. Measurements and marking were performed manually using templates, typically of timber construction, for repetitive or complicated details. Appropriate machine tools (saws, drills, etc.) would be aligned visually and each operation performed in sequence, with the workpiece being transported between individual items of equipment. Subframes were typically put together on a laying-out floor on which the form of the geometry had been marked using traditional setting-out methods.

The introduction of NC machines has enabled preparation details such as overall length and position of holes to be defined numerically via a computer console. Handling equipment automatically positions the workpiece in relation to the machine tool, which performs the necessary operations. In this way, the labour-intensive operations of marking, positioning and preparation are integrated into a single process which leads to major improvements in fabrication efficiency, especially where fairly standard or repetitive operations are concerned. Even greater efficiency can be achieved by transferring the necessary information on machining directly from the steelwork modeller into the NC machines rather than by transcribing it manually from drawings or paper specifications. This process requires a computer modeller which is capable of providing the machining operations data in a suitable form. The data can then be transferred either by writing to a floppy disk which can then be read by the NC machine, or via a direct network connection between the machine and the CAD workstation. At the time of writing only a minority of fabrication plants have complete computer-integration in this way because of incompatibilities between computing hardware and machine tools, but this integration is clearly capable of providing much greater efficiency and higher quality than the present semi-manual process.
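The step from modeller to machine can be sketched as converting hole positions in model coordinates into distances along the member. The output layout below is invented purely for illustration; real NC machines read their own formats (the DSTV NC file is one widely used example), and a practical program would also need the transverse position of each hole, end cuts, copes and so on. The function name and the member in the example are hypothetical.

```python
import math


def nc_program(mark, profile, start, end, holes):
    """Cut-and-drill instructions for one piece, in an invented plain-text layout.

    start, end: member end points in model coordinates (mm).
    holes: (point, diameter) pairs; each point is projected onto the member axis.
    """
    axis = [e - s for s, e in zip(start, end)]
    length = math.sqrt(sum(a * a for a in axis))
    unit = [a / length for a in axis]
    lines = [f"PIECE {mark}", f"PROFILE {profile}", f"CUT {length:.1f}"]
    stations = []
    for point, dia in holes:
        along = sum((p - s) * u for p, s, u in zip(point, start, unit))
        if not 0.0 <= along <= length:
            raise ValueError(f"hole at {point} lies outside piece {mark}")
        stations.append((along, dia))
    for along, dia in sorted(stations):   # drill in order along the member
        lines.append(f"DRILL {along:.1f} D{dia:g}")
    return "\n".join(lines)
```

For a 6 m beam running along the y axis at a level of 4000 mm, holes at 50 mm from each end come out as `DRILL 50.0 D22` and `DRILL 5950.0 D22`, regardless of where the beam sits in the overall model.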

Predicting future developments in computing is notoriously hazardous. However, the trend of increasing computer power with little or no increase in cost shows no sign of slowing down, suggesting that the application of computing is likely to spread even further. Applications which are currently impractical because they require excessive amounts of processing will become feasible. The evolution of graphical user interfaces appears to have reached a plateau, but the application of graphics may well become much wider, with "virtual reality" applications, for instance, giving the structural designer as well as the architect a realistic visualisation of new developments. This application has already been used in demonstration form for a small number of new constructions.

Routine design calculations may become more sophisticated, possibly allowing more adventurous design solutions, but there is a danger that the designer may become over-reliant on the processing power of the computer. A simple understanding of general structural behaviour is still essential. There may be a temptation to use over-elaborate methods of analysis and design, and the engineer should always consider whether these are appropriate, particularly bearing in mind unavoidable uncertainties regarding design loads, material strengths, etc. There is also a danger of refining designs to an excessive degree in an attempt to optimise structural efficiency. For example, a structure in which every steelwork element has been designed for minimum weight will result in the lowest overall tonnage, but almost certainly at the expense of increased fabrication and erection costs.
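The trade-off between minimum weight and simpler fabrication can be sketched as a small optimisation. Members are grouped so that each group uses the heaviest section required within it, and each extra distinct size carries a fixed cost for ordering, detailing and handling. The cost rates are hypothetical placeholders and a real cost model would be far richer; the dynamic programme below simply finds the lightest grouping for each number of sizes and then adds the size cost.

```python
def rationalise(members, rate_per_kg, cost_per_size):
    """Choose how many distinct section sizes to use.

    members: (required mass per metre, length) for each member, where the required
    mass is that of the lightest adequate section. rate_per_kg and cost_per_size are
    assumed cost rates. Returns (least total cost, number of distinct sizes).
    """
    ms = sorted(members)                 # lightest requirement first
    n = len(ms)
    inf = float("inf")
    # best[k][i]: least steel mass for the i lightest members using k sizes
    best = [[inf] * (n + 1) for _ in range(n + 1)]
    best[0][0] = 0.0
    for k in range(1, n + 1):
        for i in range(1, n + 1):
            length = 0.0
            for j in range(i - 1, -1, -1):   # last group is members j..i-1
                length += ms[j][1]
                if best[k - 1][j] < inf:
                    cand = best[k - 1][j] + ms[i - 1][0] * length
                    best[k][i] = min(best[k][i], cand)
    options = [(best[k][n] * rate_per_kg + k * cost_per_size, k)
               for k in range(1, n + 1) if best[k][n] < inf]
    return min(options)
```

With four 6 m members needing 30, 40, 45 and 55 kg/m, four sizes give the least steel (1020 kg); but if each distinct size costs as much as 100 kg of steel, two sizes become the cheapest option, illustrating the point made above about minimum tonnage and overall cost.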

Some aspects of steel design, for instance fire resistance, have traditionally been treated in an over-simplified fashion, and increased computer usage will rightly allow more rational approaches to be considered more commonly as part of the design calculations. Other aspects of structural behaviour have often simply been ignored. Dynamic analysis, for instance, is a specialist topic which the designer may increasingly be called on to look at in detail, and again the integrated computer model could enable this to be done painlessly as far as the designer is concerned. Increasingly, the designer will create an intelligent model of the structure and expose it to a number of design scenarios, observing and interpreting the responses. In this respect graphics is again likely to become prominent, with visualisation of behaviour replacing the presentation of lists of numerical results requiring careful interpretation.
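As an example of the simplest dynamic check, the natural frequencies of a uniform simply supported beam follow from Euler-Bernoulli beam theory as f_n = (n²π / 2L²)·√(EI/m), where m is the mass per unit length. A minimal sketch with a hypothetical function name; the mass used should include whatever the beam carries, and the frequency that is acceptable for a given use is a matter for the applicable design guidance.

```python
import math


def simply_supported_frequency(ei, mass_per_m, span, mode=1):
    """Natural frequency (Hz) of mode n of a uniform simply supported beam.

    ei in N*m^2, mass_per_m in kg/m, span in m (Euler-Bernoulli beam theory).
    """
    return mode**2 * math.pi / (2 * span**2) * math.sqrt(ei / mass_per_m)
```

Because the frequency varies with the square of the mode number, the second mode of any such beam is four times the fundamental.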

In building forms where complex geometry is involved, such as the International Terminal at Waterloo, the use of conventional draughting methods would have been almost impossible. In this case a 3D modeller was used to set up the geometry of a single bay of the three-pinned trussed-arch system. This acted as the starting point for the whole roof and also facilitated the setting-out on site, with a number of targets attached to each arch which could then be positioned on site using precise three-dimensional co-ordinates and conventional electronic distance measuring equipment.
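The setting-out calculation itself is straightforward trigonometry. A minimal sketch, assuming a local grid with x east, y north and z up, giving the whole-circle bearing, horizontal and slope distances, zenith angle and height difference from an instrument station to a target; instrument and target heights, earth curvature and refraction are ignored, and the function name is hypothetical.

```python
import math


def setting_out(station, target):
    """Polar setting-out data from an instrument station to a target.

    Coordinates in metres: x east, y north, z up.
    """
    de, dn, dz = (t - s for s, t in zip(station, target))
    horizontal = math.hypot(de, dn)
    bearing = math.degrees(math.atan2(de, dn)) % 360.0   # clockwise from grid north
    slope = math.sqrt(horizontal**2 + dz**2)
    zenith = math.degrees(math.atan2(horizontal, dz))    # 0 degrees = vertically up
    return {"bearing_deg": bearing, "horizontal_m": horizontal, "slope_m": slope,
            "zenith_deg": zenith, "height_diff_m": dz}
```

A target 3 m east and 4 m north of the station, at the same level, lies 5 m away horizontally at a zenith angle of 90 degrees.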

Integration of computers into each of the different stages of design and construction will not only lead to improved efficiency with data automatically carried forward, but it will also extend computing into areas which might be regarded as trivial. If the scheme design involves the creation of a simple 3D wire-frame model of the structure, then loading calculations become almost automatic. Whilst this is not a difficult part of engineering design calculations, it is somewhat tedious and automatic load assessment would result in valuable time-savings. It is possible that eventually expert systems, which have so far had limited success in structural engineering, may be of use at the concept stage and in integrating the structural form with services and building-use requirements.
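For a one-way spanning floor on parallel beams, the automatic load assessment mentioned above reduces to multiplying the factored area load by each beam's tributary width. A minimal sketch with hypothetical function names; the partial factors are parameters because their values come from the governing code, and the assumption that the slab stops at the outer beams is made only for this sketch.

```python
def tributary_widths(positions):
    """Tributary width (m) of each parallel beam for a one-way slab spanning between them.

    positions: sorted beam coordinates across the slab span (m).
    The slab is assumed to stop at the outer beams.
    """
    widths = []
    for i, x in enumerate(positions):
        left = (x - positions[i - 1]) / 2 if i > 0 else 0.0
        right = (positions[i + 1] - x) / 2 if i + 1 < len(positions) else 0.0
        widths.append(left + right)
    return widths


def beam_udls(positions, dead_kpa, imposed_kpa, gamma_g, gamma_q):
    """Design UDL (kN/m) on each beam from area loads in kN/m^2 and partial factors."""
    w = gamma_g * dead_kpa + gamma_q * imposed_kpa
    return [w * b for b in tributary_widths(positions)]
```

Beams at 3 m centres carry 3 m of floor each, while the two edge beams carry half that.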

These developments, which all depend on the establishment of a common, universal database structure, will allow information about a structure to be shared between different applications, so that a change in data as a result of one process automatically feeds through to other dependent processes to ensure consistency. Object-orientated programming concepts and relational databases provide the vehicles for these developments. It has been seen that the 3D modeller is already being linked to fabrication machinery and to other aspects of the whole building. This linking is likely to become more common as standard data structures are established and fabricators exploit the improvements in efficiency which integration offers. The linking can be extended through to site planning, allowing more precise control over component delivery and operations, where even greater improvements in efficiency could be realised. Integration is also likely to be extended to non-structural areas with, for instance, analysis of energy requirements, day lighting, etc. all being integrated and making use of a central database.
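The principle that a change in one place automatically feeds through to dependent processes can be sketched with the observer pattern. This is an in-memory illustration only, not a relational database; the class, the key naming and the two dependent processes in the example are hypothetical.

```python
from collections import defaultdict


class CentralModel:
    """Shared store of structural data; dependent processes re-run when an item changes."""

    def __init__(self):
        self._data = {}
        self._dependants = defaultdict(list)

    def subscribe(self, key, callback):
        """Register callback(model, key, value) to run whenever key changes."""
        self._dependants[key].append(callback)

    def set(self, key, value):
        old = self._data.get(key)
        self._data[key] = value
        if old != value:                  # only genuine changes propagate
            for callback in self._dependants[key]:
                callback(self, key, value)

    def get(self, key):
        return self._data[key]
```

If a drawing generator and a quantities routine both subscribe to the section of beam B1, changing that section updates both, while re-entering the same value triggers nothing.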